Who It's For

Agenta is for teams building reliable AI applications with large language models (LLMs). That includes developers, product managers, and domain experts, who can all work together in one tool even if they don't write code. It is designed to help teams keep their AI apps stable and trustworthy.

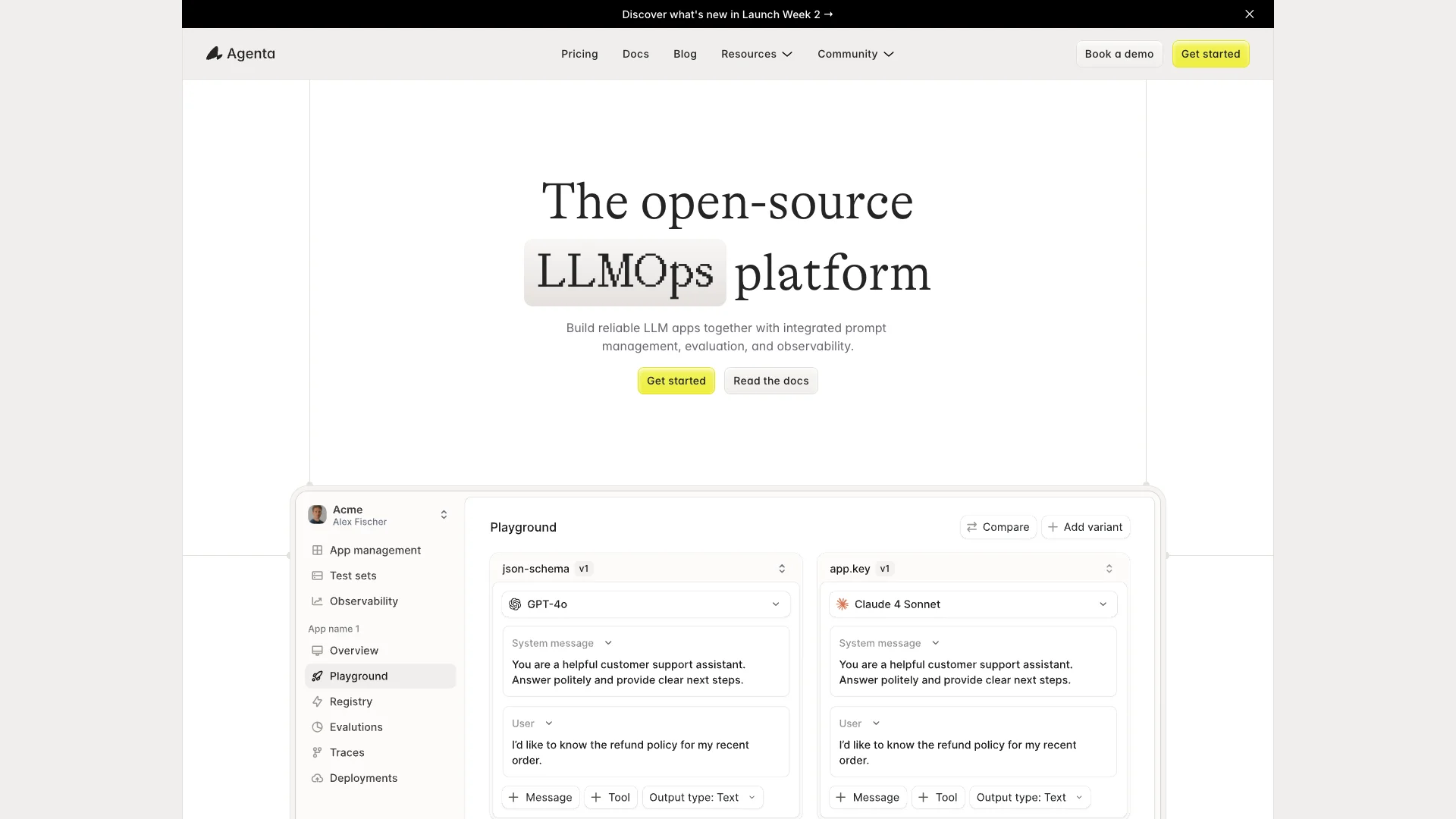

What You Get

You get a central platform for managing your AI app development. Test different prompts and models side by side, track changes, and measure AI performance. The platform includes tools for automated and human testing, plus ways to monitor your AI in production and gather user feedback.

How It Works

Agenta brings all your AI tasks into one system. You can compare ideas quickly and trace every step your AI takes, so you can see where problems occur. Non-developers can safely experiment with prompts using a simple interface. This lets teams iterate faster and ship dependable AI products.